Full documentation and demo can be found at

deep.tiri.xxx

Can technology be trained to deliberately commit mistakes in order to help spark creative improvisation in performers and artists?

Abstract

We try to build machines to look as life-like as possible. Computers today can learn and be trained just like humans. What is a human? What determines if a being is human?

Humans make mistakes, and so do machines. As a user of technology, I have found that machines often glitch and run into errors. Can these errors be a sign of humanity within machines?

Cybernetics studies intelligent learning systems that learn from feedback and from mistakes within their code. “Feedback” is communication between two things; it is a process of learning and evolving. How can I use this process within my own creative process?

As a performer, I have had things go wrong almost every time I have performed. I have even built a machine to perform predictably, yet things still go wrong. Does this mean it's a “live performance”? When the machine or other outside factors go wrong, something happens that you cannot feel in a pre-recorded process. I have learned to embrace machine malfunctions as a new form of improvisation. Solving a problem onstage is good improvisation practice for performers and artists. Improvisation creates an unpredictable, original, and unique art form.

For my thesis, I created bots that listen and respond to humans, using machine learning to generate new narrative techniques in unpredictable ways. The goal of my performance is to improvise on top of misinformed and mistrained programs, using human vocals to add harmony. To do so, performers must listen carefully to the chaotic bots, then sing and respond along with them.

Further reading

As a speaker of broken English, I have found that the way I speak makes everyone, human or machine, misunderstand what I mean and find other meanings in the conversation. I find these misunderstandings fascinating, and they can become an art piece. During my performances, my collaborators, my machines, and I often make mistakes live. This forces a creative impulse that produces unexpected and unique experiences.

One book that inspired my journey was The Most Human Human by Brian Christian. In this book, Christian explains: “The thing that makes human speech different from machine speech is emotional and social intelligence. Humans are not just funny but also moody, aggressive or sometimes can feel nothing”. Another source, the article AI’s Language Problem by Will Knight, states: “There’s an obvious problem with applying deep learning to language. It’s that words are arbitrary symbols, and as such they are fundamentally different from imagery. Two words can be similar in meaning while containing completely different letters, for instance; and the same word can mean various things in different contexts.” “Humans are terrible at computing with huge data, but we’re great at abstraction and creativity.”

How can I design a performance to show this difference between human and machine? How can I put my character into the bot and create something weird and fun from it? How can I create a speaking machine that generates mistakes freely?

From my research, most companies creating voice interfaces run into the same problem: the system does not know when a person will start speaking or how long they will speak. This easily causes errors in a voice interface.

Can I use these errors artistically instead of letting the machine simply discard them?

For my performance, I took these discarded fragments from voice interfaces and produced creative responses in the form of three distinct bots.

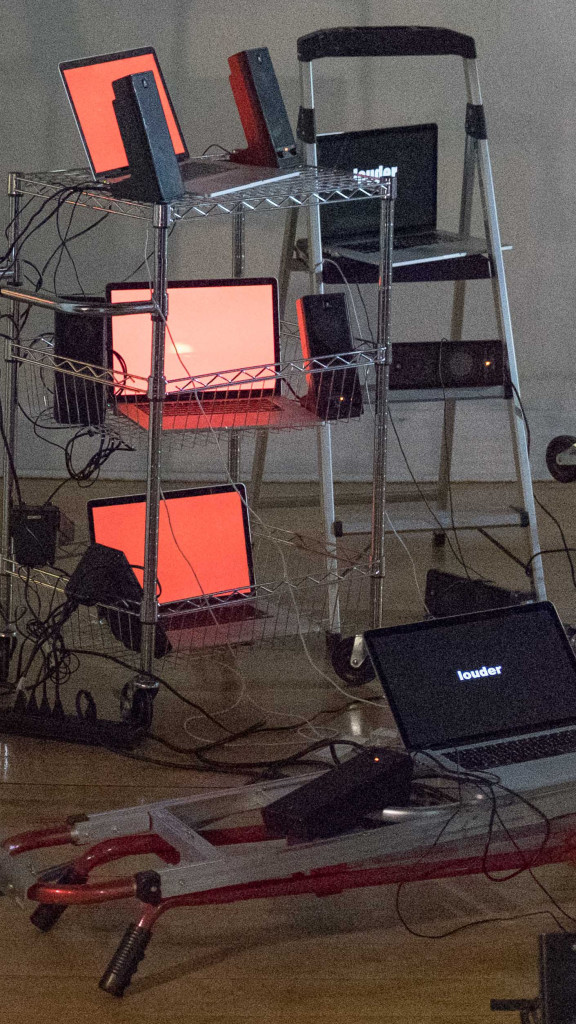

Three types of bots:

Vanity bot – It always repeats whatever it hears, including itself. I used p5.speech, written by R. Luke DuBois, for speech synthesis and speech recognition.

Bot Learning Opera – Having closely studied opera scripts, it responds with whatever it thinks has a similar contextual value. The bot uses the ml5.js library to generate its model.

Rhyming bot – I used the Pronouncing Python library, written by Allison Parrish, to generate rhyming words. It replies with a rhyme for the word it heard.
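The rhyming reply can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used in the performance: `pronouncing.rhymes` is the library's rhyme lookup, while the function name `rhyme_reply`, its `lookup` and `rng` parameters, the choice to rhyme only the last word heard, and the choice to echo the word when no rhyme is found are all hypothetical.

```python
import random

try:
    # Allison Parrish's library; rhymes(word) returns a list of rhyming words.
    import pronouncing
    default_lookup = pronouncing.rhymes
except ImportError:
    # Assumption: without the library, the sketch knows no rhymes at all.
    def default_lookup(word):
        return []


def rhyme_reply(heard, lookup=default_lookup, rng=random):
    """Reply to the last word heard with a random rhyme (hypothetical helper).

    If no rhyme is known, the bot simply echoes the word back.
    """
    words = heard.lower().split()
    if not words:
        return ""
    # Strip punctuation that a speech recognizer might attach to the word.
    last = words[-1].strip(".,!?;:\"'")
    candidates = lookup(last)
    return rng.choice(candidates) if candidates else last
```

Passing the lookup in as a parameter keeps the bot's behavior separate from the dictionary behind it, so a deliberately wrong or mistrained rhyme source could be swapped in to provoke more mistakes.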

All bots speak at a random speed and pitch. This variation sparks machine errors while the bots are listening and contributes to the sound composition of the performance.
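One way to picture the random voice settings is the Python sketch below. It is a stand-in for illustration only: the bots themselves used browser speech synthesis through p5.speech, and the names `VoiceSettings` and `random_voice`, as well as the numeric ranges, are assumptions chosen for this example rather than values from the performance.

```python
import random
from dataclasses import dataclass


@dataclass
class VoiceSettings:
    rate: float   # speaking speed multiplier; 1.0 would be normal speed
    pitch: float  # voice pitch multiplier; 1.0 would be normal pitch


def random_voice(rng=random, rate_range=(0.5, 1.5), pitch_range=(0.5, 2.0)):
    """Draw a new speed and pitch for one utterance (ranges are illustrative)."""
    return VoiceSettings(
        rate=rng.uniform(*rate_range),
        pitch=rng.uniform(*pitch_range),
    )


# Each bot would draw fresh settings before every reply.
for bot in ("vanity", "opera", "rhyming"):
    print(bot, random_voice())
```

Drawing new values for every utterance, rather than once per bot, means a listening bot never hears the same voice twice, which is what makes recognition errors more likely.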

All three bots

Design of performance:

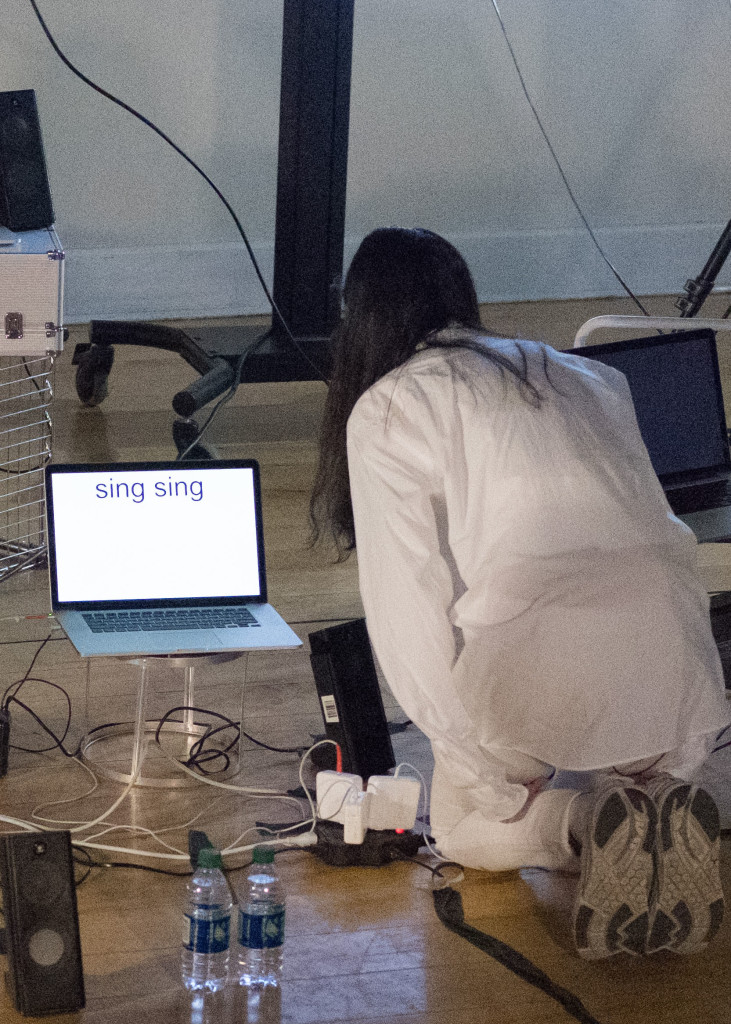

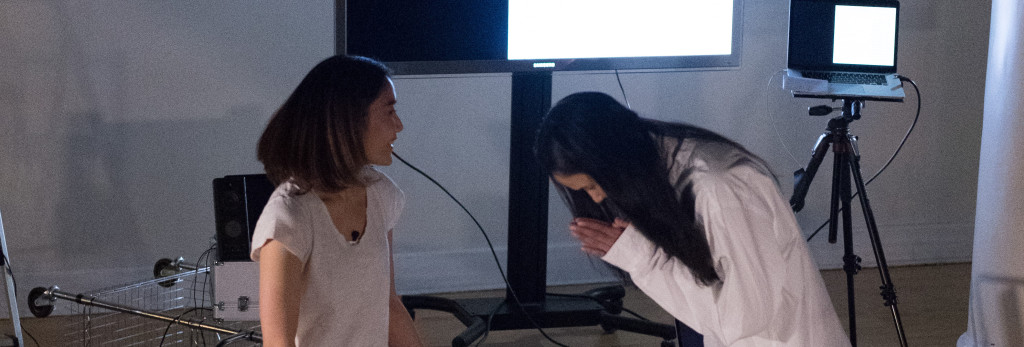

I cast myself as a technician who knows how to fix the machines. When the bots stop listening, I walk over and start speaking with them. My collaborator is January Punwattana, a professional singer and actress. She plays my most successful bot, and she sings only in a soprano voice. She reacts the same way as the other bots: she repeats a word only when she hears one. I chose a soprano for my performance because machines tend to mishear very high or very low pitches. Beyond adding to the sound composition, the soprano also feeds the loop of misunderstanding in the performance.

References

Knight, Will. (2016, August 9). AI’s Language Problem. Retrieved from https://www.technologyreview.com/s/602094/ais-language-problem/

Turing, Alan Mathison. (1950). Computing Machinery and Intelligence. Mind 59: 433-460. Retrieved from https://home.manhattan.edu/~tina.tian/CMPT420/Turing.pdf

Stolcke, Andreas and Droppo, Jasha. Comparing Human and Machine Errors in Conversational Speech Transcription. Microsoft AI and Research, Redmond, WA, USA. Retrieved from https://www.microsoft.com/en-us/research/wp-content/uploads/2017/06/paper-revised2.pdf

Christian, Brian. The Most Human Human: What Talking with Computers Teaches Us About What It Means to Be Alive. New York: Doubleday, 2011. Print.

Perloff, Marjorie and Dworkin, Craig, Eds. The Sound of Poetry / The Poetry of Sound. Chicago: The University of Chicago Press, 2009. Print.

Bogart, Anne and Landau, Tina. The Viewpoints Book: A Practical Guide to Viewpoints and Composition. Theatre Communications Group, 2005. Print.

Neumark, Norie, Gibson, Ross and Leeuwen, Theo van. VOICE: Vocal Aesthetics in Digital Arts and Media. Cambridge, MA: MIT, 2010. Print.
