deepTalking2.0

A live poem generated from misunderstanding

A continuation of my thesis show, deepTalking

I wanted to show the capabilities of humans compared to machines

From my thesis question:

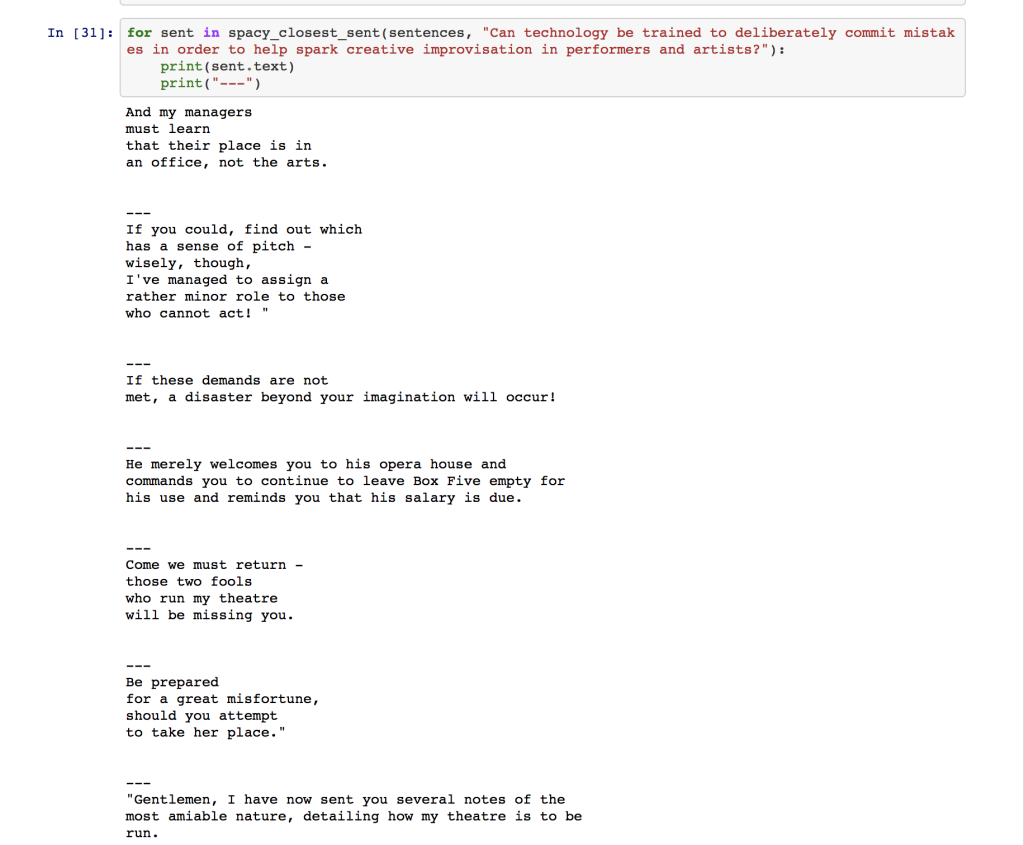

Can technology be trained to deliberately commit mistakes

in order to help spark creative improvisation in performers and artists?

I am a performer. In my experience, things have gone wrong almost every time I have performed. Solving a problem on stage is a good way for performers to practice improvisation

Improvisation also shows the difference between humans and machines. Both of us make errors and mistakes. From my research, humans are terrible at computing with huge amounts of data, but we’re great at abstraction and creativity. Humans also have emotional and social intelligence. That’s why we are capable of noticing very small differences between things

Also, as you can tell, I speak broken English. I have found that the way I speak makes everyone (human and/or machine) misunderstand what I mean and create other meanings in the conversation. I find this fascinating

To continue my first show, I designed a new way to interact. I created

deepScription

Instead of telling the audience why I am doing this in ordinary English, I wanted to show them how I wrote this piece using machine thought (or process). I used spaCy word vectors to generate a description, and I used opera scripts to train it. It’s like going to the opera: you don’t really understand what’s going on, but you enjoy the music and the ability of the performers

When the bots are idle, I turn to the audience and read the deepScription. Then I turn back, turn on the sound from the laptop, and read whatever the bots show. When the bots are idle again, I turn back to the audience and repeat

From my voice interface research, most companies creating voice interfaces run into a problem when they don’t know how long a person will speak or when they will start speaking. This problem easily creates errors in a voice interface. Building on this, I made bots that have a random speed and pitch in order to create errors. Also, if this were a real person, would that mean they are not a good listener? From that idea I created three distinct bots.

Three types of bots:

Vanity bot – It always repeats whatever it hears, including itself. I used p5.speech, written by R. Luke DuBois, for speech synthesis and speech recognition.

Bot Learning Opera – After closely studying opera scripts, it responds with whatever it thinks has a similar contextual value. The bot uses ml5.js algorithms to generate its understanding model.

Rhyming bot – I used the Pronouncing Python library, written by Allison Parrish, to generate rhyming words. It replies with a word that rhymes with the word it heard.
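The rhyming reply can be pictured roughly like this. It is only a minimal sketch, not the code used in the show: the small `TOY_RHYMES` table and the function names are hypothetical stand-ins so that it runs without extra installs. With the Pronouncing library installed, its `pronouncing.rhymes` function could be passed in as `rhymes_for` instead.

```python
import random

# Hypothetical stand-in for a pronunciation dictionary (not from the show).
# With the Pronouncing library installed, `pronouncing.rhymes` could be
# passed as `rhymes_for` in place of `toy_rhymes`.
TOY_RHYMES = {
    "night": ["light", "bright", "sight"],
    "song": ["long", "strong", "wrong"],
    "heart": ["art", "start", "apart"],
}


def toy_rhymes(word):
    """Return the known rhymes for a word, or an empty list."""
    return TOY_RHYMES.get(word, [])


def rhyming_reply(heard, rhymes_for=toy_rhymes, rng=random):
    """Swap each heard word for a random rhyme when one is known."""
    reply = []
    for word in heard.lower().split():
        candidates = rhymes_for(word)
        # Keep the original word when no rhyme is available.
        reply.append(rng.choice(candidates) if candidates else word)
    return " ".join(reply)


print(rhyming_reply("my heart sings a song at night"))
```

Words without a known rhyme pass through unchanged, so the reply keeps the rhythm of what was heard while drifting in meaning.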

All bots have random speed and pitch. This sparks machine errors while listening and contributes to the performance’s sound composition.
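As a rough illustration of the random speed and pitch, here is a small sketch of how each bot could pick its voice settings before speaking. The ranges and names below are my assumptions for illustration, not the values used in the show; in a p5.speech sketch, numbers like these would be handed to the speech object's rate and pitch settings.

```python
import random

# Assumed ranges, chosen only for illustration. The browser speech
# synthesis interface accepts a wider rate range (0.1 to 10) and a
# pitch between 0 and 2.
RATE_RANGE = (0.5, 2.0)
PITCH_RANGE = (0.1, 2.0)


def random_voice_settings(rng=random):
    """Pick a random speaking rate and pitch for one utterance."""
    return {
        "rate": round(rng.uniform(*RATE_RANGE), 2),
        "pitch": round(rng.uniform(*PITCH_RANGE), 2),
    }


# Each bot draws fresh settings every time it speaks.
for bot in ("vanity", "opera", "rhyming"):
    print(bot, random_voice_settings())
```

Drawing new values for every utterance, rather than once per bot, keeps the voices unpredictable for both the speech recognition and the performer.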

All three bots

Full documentation and demo can be found at

deep.tiri.xxx