Can technology be trained to make deliberate mistakes that spark creative improvisation in performers and artists?

Abstract

We try to build machines that look as lifelike as possible. Computers today can learn and be trained, much as humans can. What is a human? What determines whether a being is human?

Humans make mistakes, and so do machines. As a user of technology, I have found that machines often glitch and run into errors. Can these errors be a sign of humanity within machines?

Cybernetics is the study of intelligent learning systems that learn from feedback and from mistakes within their code. “Feedback” is communication between two things; it is a process of learning and evolving. How can I use this process in my own creative practice?

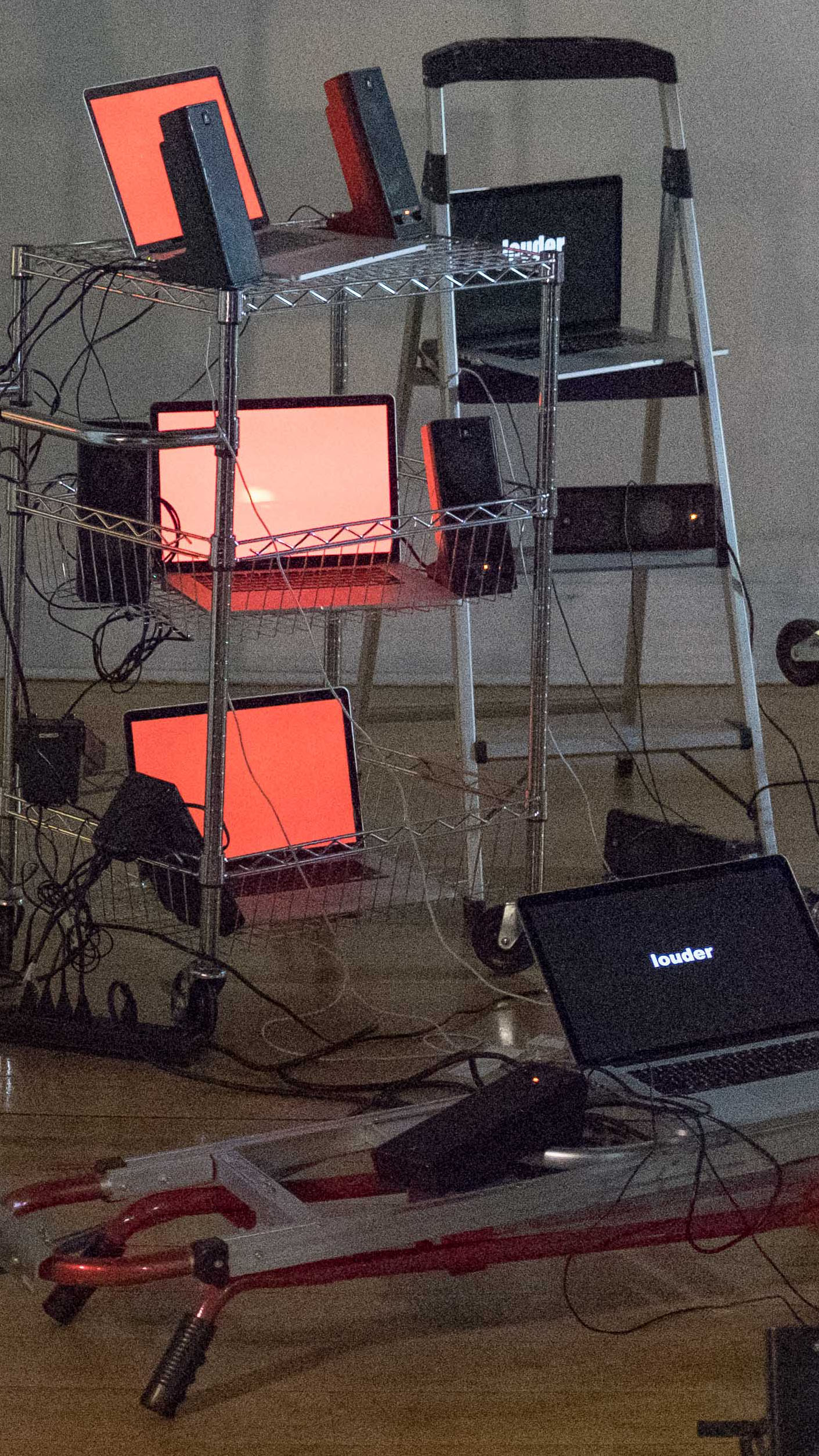

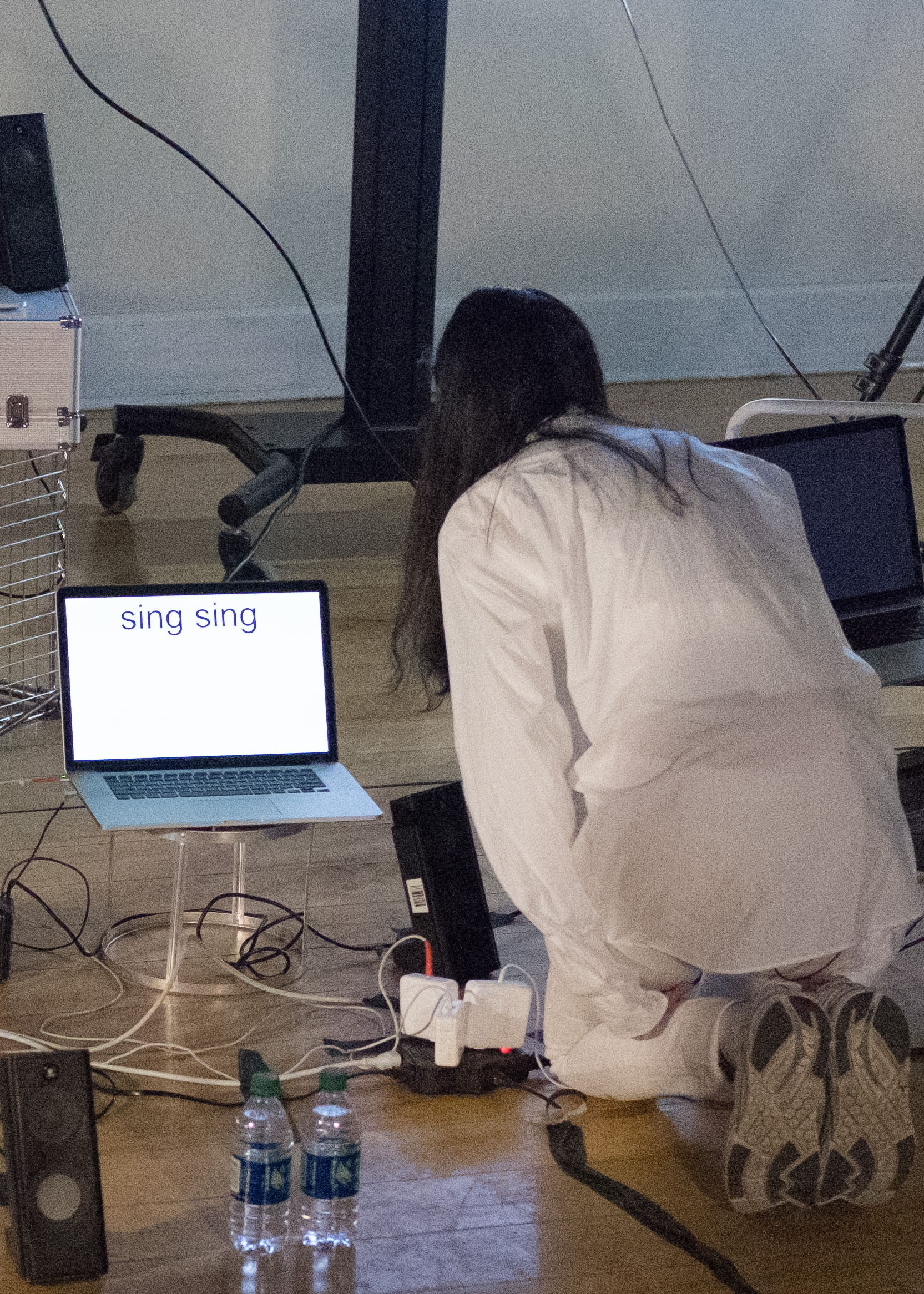

As a performer, I have had something go wrong almost every time I have performed. I have even built a machine to perform predictably, yet things still go wrong. Does this mean it's a “live performance”? When the machine or other outside factors go wrong, you feel things that you cannot feel in a pre-recording process. I have learned to embrace machine malfunctions as a new form of improvisation. Solving a problem onstage is a good way for performers and artists to practice improvisation. Improvisation creates an unpredictable, original, and unique art form.

I created bots that listen and respond to humans, using machine learning to generate new narrative techniques in unpredictable ways. The goal of my performance is to improvise on top of misinformed and mistrained programs, using human vocals to add harmony. To do so, performers must listen carefully to the chaotic bots, then sing and respond along with them.
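The idea of a bot that answers a performer with deliberate mistakes can be sketched in a few lines of Python. This is only an illustration and not the software used in the performance: the function name `glitchy_response`, the parameters `error_rate` and `max_shift`, and the choice to represent a sung phrase as MIDI note numbers are all assumptions made for the example.

```python
import random


def glitchy_response(heard_notes, error_rate=0.3, max_shift=2, seed=None):
    """Echo a phrase back, deliberately "mis-hearing" some of its notes.

    heard_notes: list of MIDI note numbers (hypothetical input format).
    error_rate:  probability that any single note is played wrong.
    max_shift:   largest mistake, in semitones, up or down.
    seed:        optional seed so a rehearsal can be repeated exactly.
    """
    rng = random.Random(seed)
    # Possible mistakes: any non-zero shift within max_shift semitones.
    shifts = [s for s in range(-max_shift, max_shift + 1) if s != 0]

    response = []
    for note in heard_notes:
        if rng.random() < error_rate:
            # The bot "commits a mistake" and lands on a nearby wrong note.
            note = note + rng.choice(shifts)
        response.append(note)
    return response


if __name__ == "__main__":
    phrase = [60, 62, 64, 65, 67]  # C, D, E, F, G
    print(glitchy_response(phrase, error_rate=0.5))
```

Raising `error_rate` makes the bot less reliable and gives the singer more surprises to respond to; setting it to zero turns the bot back into a predictable machine.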

In collaboration with Jann Punwattana

Further reading can be found at

Photos by Leon Eckert